A year of continuous shifts within the sector — and familiar debates beyond it

As we close out 2025, the milestones of the past twelve months underscore just how quickly the industry is shifting beneath our feet. DeepSeek’s breakthrough reshaped assumptions about compute efficiency and cost; NVIDIA’s announcement of Blackwell Ultra signaled yet another leap in accelerator performance; the White House’s AI Action Plan formalized the policy stakes around national compute capacity; Stargate’s Abilene facility began operating at unprecedented scale, becoming a symbol of the AI‑era mega‑campus; and debates around circular AI investments highlighted both the ambition and the fragility of capital flows into frontier infrastructure. These are only a few of the year’s key milestones.

These developments set the stage for a year that will balance continuity with disruption. For vendors and operators, 2026 will bring meaningful shifts in technologies, architectures, and competitive dynamics. Yet from the outside, the narrative may feel familiar. The same themes that began surfacing more prominently in recent years — and defined public debate throughout 2025 — will continue to dominate headlines, even as the underlying infrastructure evolves at a far faster pace.

What we’re not predicting — because everyone else already is

Power scarcity remains the defining constraint: power availability will continue to be the single most important factor in site selection for data center projects. Speculation about an AI‑driven investment bubble is expected to intensify as trillions of dollars of critical infrastructure are deployed amid lingering uncertainty about long‑term monetization models. And the sector’s public visibility will keep rising, bringing sharper community pushback, permitting resistance, and societal concerns ranging from energy affordability to the impact of AI on jobs, along with growing scrutiny of the safe and responsible use of AI, particularly among young people. These pressures are amplified by the industry’s lack of coherent, accessible, and positive messaging about its value to communities and the broader economy.

Because these forces are so obvious and so deeply embedded in the industry’s trajectory, we will not include them among our predictions. Instead, this outlook focuses on the emerging dynamics that will shape vendors, operators, and the broader ecosystem in ways both expected and unexpected.

The easy ones: our highest-confidence expectations for 2026

These trends are already well underway, with early signals evident throughout 2025, reinforcing a trajectory that leaves little doubt about their momentum heading into 2026.

1. Consolidation and partnerships accelerate

The complexity of gigawatt‑scale data centers is pushing vendors to work together more closely, driving a surge in strategic partnerships that combine expertise across power, cooling, controls, and integration. Expect more joint reference architectures, co‑engineered solutions, and collaborative designs that extend well beyond any single vendor’s historical domain. We anticipate at least ten additional partnership announcements in 2026 as vendors align to meet the growing demands of AI‑era infrastructure.

In parallel, consolidation will continue as vendors with differentiated capabilities become acquisition targets, particularly in high-priority areas such as liquid cooling, solid-state power electronics, and global design and service expertise. These acquisitions will further accelerate the shift toward full-stack delivery models, with integrated chip-to-rack, rack-to-row, and row-to-hall solutions becoming a defining competitive strategy. We expect no fewer than five acquisitions or take-private transactions above $1 billion, underscoring the intensifying race to secure critical capabilities across the data center physical infrastructure (DCPI) stack.

2. Real builds matter more than bold visions (and vanished ones)

Multi‑billion‑dollar and multi‑gigawatt campus announcements might continue to dominate headlines, but the center of gravity will shift toward execution rather than ideation. Operators will focus on translating these bold visions into reality — securing power, navigating permitting, sequencing construction, and commissioning facilities on time.

With the running backlog of public announcements now exceeding 70 GW of stated capacity, a meaningful share of these projects is likely to remain “braggerwatts” — aspirational declarations that never progress past land options, concept designs, or early‑stage filings. As economic, regulatory, and power‑availability constraints sharpen, attention will shift back to credible projects with clear pathways to completion and well‑defined delivery plans.

Today, several sites are on trajectories that suggest they could eventually cross the fabled 1 GW capacity threshold, but none have reached that milestone yet. By the end of 2026, however, we expect at least five sites worldwide to surpass 1 GW of operational capacity.

3. Divergence grows before convergence returns

Despite efforts toward convergence, 2026 is likely to bring even greater architectural divergence across power and cooling, a proliferation of design pathways rather than a narrowing of them. This is being fueled by rapid technological shifts that show no signs of slowing.

On the power side, even as clarity improves around 400 Vdc and 800 Vdc rack architectures, vendors will diversify rather than narrow their portfolios — developing new families of DC circuit breakers, power shelves, hybrid and supercapacitor‑based energy storage, and MV switchgear integrated with solid-state electronics in preparation for deployments expected in 2028/29.
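For readers less familiar with the move to higher DC voltages, the underlying arithmetic is simple: for a fixed rack power, bus current falls in proportion to voltage (I = P / V), and conduction losses in a given conductor scale with the square of the current. The Python sketch below compares 400 Vdc and 800 Vdc for a few rack power levels. The rack powers are hypothetical, and the model assumes an ideal bus with no conversion losses.

```python
def dc_bus_current_amps(rack_power_kw: float, bus_voltage_v: float) -> float:
    """Return the DC bus current in amps for a given rack power (I = P / V).

    Illustrative only: assumes an ideal DC bus and ignores conversion losses.
    """
    if bus_voltage_v <= 0:
        raise ValueError("bus voltage must be positive")
    return rack_power_kw * 1000.0 / bus_voltage_v


# Hypothetical rack powers in kW, chosen for illustration only.
for rack_kw in (150, 500, 1000):
    for volts in (400, 800):
        amps = dc_bus_current_amps(rack_kw, volts)
        print(f"{rack_kw:>5} kW rack at {volts} Vdc -> {amps:,.0f} A")
```

Doubling the voltage halves the current for the same power, which eases conductor and busbar sizing for very dense racks. It does not make protection easier: DC current has no natural zero crossing to help extinguish an arc, one reason dedicated DC circuit breakers are needed.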

Cooling will see similar diversification. Novel technologies, including two‑phase direct liquid cooling (DLC), CDU‑less single‑phase DLC (without a coolant distribution unit, or CDU), and a wide variety of cold‑plate architectures, are expected to gain momentum as the market becomes a testing ground for new approaches, further broadening the range of solutions in the ecosystem.

In this environment, initiatives like the Open Compute Project (and its collaborations with ASHRAE, Current/OS, and others) will become even more important in steering the industry, offering reference frameworks and shared direction to help channel innovation while reducing unnecessary fragmentation.

Watch closely: trends gaining momentum — but not yet locked in

Early signals suggest these trends could gain real traction — but timing, economics, and scale remain uncertain.

4. “Micro‑mega” edge AI deployments are on the rise

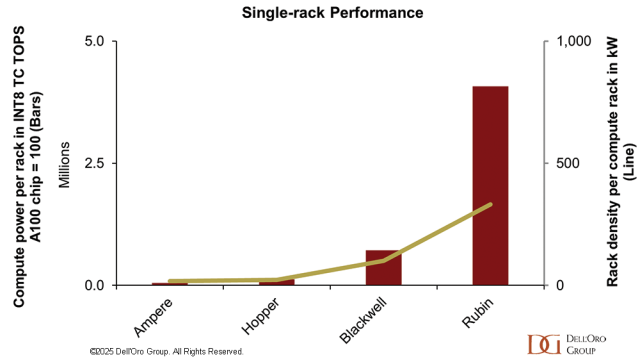

As compute density within a single rack skyrockets, many AI workloads will be able to operate on one — or just a handful — of cabinets. These compact yet powerful clusters will increasingly sit alongside conventional compute to support hybrid workloads. Expect a wave of megawatt-class, ultra-dense AI racks for enterprise post-training and inference — small-scale AI factories — embedded within colocation sites, enterprise campuses, or telco edge facilities.

What makes this shift noteworthy is what it reveals about broader AI adoption: AI is moving beyond pilots and proofs‑of‑concept and into day‑to‑day business operations, requiring right‑sized, high‑density compute footprints placed directly where data and decision‑making occur.

Architecturally, this marks a meaningful shift. Instead of concentrating accelerated compute solely in hyperscale campuses or purpose‑built training clusters, enterprises and colocators will increasingly deploy AI directly into existing facilities. This proximity to business‑critical workflows will drive demand for modular, pre‑engineered AI systems that can be “dropped in” with minimal disruption, along with managed AI‑infrastructure services that oversee monitoring, lifecycle management, and performance optimization.

5. Air cooling strikes back

The novelty of liquid cooling has dominated industry discourse for the past three years, pushing vendors and operators to rapidly adapt — bringing new products to market, redesigning systems to accommodate liquid infrastructure, and upskilling operational teams to support deployments at scale. But as AI deployments move beyond frontier‑model training clusters and into enterprise environments, high‑density AI racks will more frequently appear in facilities not originally designed for liquid cooling.

This shift will prompt a resurgence in advanced air‑cooling solutions. Expect a proliferation of 40–80 kW air‑cooled racks supported by extremely high‑performance thermal systems, paired with 60–150 kW liquid‑cooled racks equipped with liquid‑to‑air sidecars. The result: hybrid thermal profiles within the same facility, introducing complex challenges for operators managing uneven heat loads and airflow dynamics.
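The uneven heat loads described above can be illustrated with a small Python sketch of a single hypothetical row. It assumes that both air-cooled racks and liquid-cooled racks with liquid-to-air sidecars reject essentially all of their heat into the room air (a sidecar moves heat out of the liquid loop but back into the hall), and it ignores fan and pump power. The 8 kW legacy rack power is a hypothetical value; the AI rack powers are picked from the ranges above.

```python
from dataclasses import dataclass


@dataclass
class Rack:
    name: str
    power_kw: float
    # Fraction of the rack's heat that ends up in room air. Air-cooled racks
    # and liquid racks with liquid-to-air sidecars both reject ~all of it to
    # the room (simplifying assumption); a rack tied to facility water would
    # use a lower value.
    air_fraction: float = 1.0


def row_profile(racks):
    """Return (total room-air heat in kW, densest-to-lightest rack ratio)."""
    heats = [r.power_kw * r.air_fraction for r in racks]
    return sum(heats), max(heats) / min(heats)


# Hypothetical row: legacy enterprise racks beside AI racks drawn from the
# 40-80 kW air-cooled and 60-150 kW liquid-with-sidecar ranges.
row = [
    Rack("legacy-1", 8),
    Rack("legacy-2", 8),
    Rack("ai-air", 60),
    Rack("ai-liquid-sidecar", 120),
]
total_kw, spread = row_profile(row)
print(f"room-air heat: {total_kw:.0f} kW, densest/lightest ratio: {spread:.0f}x")
```

In this example the row rejects 196 kW into room air, and its densest rack carries 15 times the load of its lightest, the kind of spread that makes airflow planning harder than the row total alone suggests.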

Far from being overshadowed by liquid cooling, air‑cooling systems are poised for incremental growth as operators seek flexible, retrofit‑friendly approaches to support heterogeneous rack densities across mixed‑use sites.

6. Immersion cooling re-emerges in modular form

After the hype cycle of recent years, immersion cooling is beginning to find its footing in more targeted, pragmatic applications. Rather than competing head‑on with DLC for hyperscale AI clusters, immersion vendors are shifting toward modular, compact systems that deliver differentiated value.

We expect growing traction in edge, telecom, and industrial environments, where immersion’s sealed‑bath architecture offers advantages in reliability, environmental isolation, and minimal site modification. These deployments will remain modest in scale, but meaningful in carving out a sustainable niche beyond today’s supercomputing and crypto segments.

To be clear, immersion cooling is not poised to displace DLC or become a dominant cooling technology. However, it is finally entering a phase where use‑cases align with its strengths — enabling vendors to build viable businesses around modular, ready‑to‑deploy immersion clusters that “drop in” alongside traditional IT and support workloads that benefit from simplified thermal management and rapid deployment.

7. Europe and China wake up — but in very different ways

Europe and China are both poised for stronger AI‑driven data‑center momentum in 2026, but their trajectories could not be more different. In power‑constrained Europe, growth will increasingly hinge on inference deployments located closer to population centers, to minimize network latency (even if compute latency remains the bigger challenge for AI services). This shift toward user‑proximate infrastructure will steer investment toward distributed, high‑density nodes rather than massive gigawatt-scale training campuses. Within this landscape of smaller facilities, a growing cohort of start‑up model builders will prioritize hyper‑efficient architectures that can extract maximum utility from these distributed fleets, for both inference and selective training workflows.

China, by contrast, faces no shortage of power. Its constraint is access to the latest generation of advanced accelerators. We expect operators to continue building at scale using a mix of domestic silicon and whatever Western supply remains available — iterating rapidly as local manufacturers improve capability generation by generation. Over the next few years, this mix‑and‑match strategy will help China bridge the gap until it achieves greater semiconductor self‑sufficiency, resulting in substantial expansion of AI data‑center capacity even under export controls.

The long shots: unlikely swings with outsized impact

Three low-probability but transformative developments, if they emerge, could reshape the data center landscape far more than their probability suggests.

8. U.S. government tightens regulation of the data center industry

A push in Washington to encourage investment in advanced cooling technologies — including a proposed bill aimed at accelerating liquid‑cooling adoption — could have unintended consequences. While well‑intentioned, efforts to steer technological choices risk drawing the federal government more directly into data center design decisions, increasing oversight and potentially making infrastructure requirements more rigid at a time when flexibility is essential.

We do not expect sweeping regulation to materialize in 2026. The current administration has closely aligned itself with AI as a pillar of economic competitiveness and will be wary of stymieing data center buildout, especially given its role in supporting GDP growth. Moreover, political attention will be dominated largely by the mid‑term elections, leaving little bandwidth for complex industry‑specific legislation.

However, affordability and household cost pressures are set to become highly charged political themes — and in that environment, data centers may attract negative scrutiny. As utilities grapple with rising demand and public concern around bills, the industry could face a wave of unfavorable headlines and heightened calls for transparency. To mitigate reputational risk, operators will need to invest more heavily in public engagement, clear messaging, and proactive demonstration of their contributions to reliability, economic growth, and community well‑being.

9. The first critical failure caused by a liquid-cooling leak hits the headlines

The early wave of liquid-cooled deployments often moved faster than the industry’s collective design and operational expertise. Many systems were installed without fully accounting for the nuances of coolant management, materials compatibility, monitoring, and routine maintenance — conditions that naturally elevate leak risk. Throughout 2025, we saw scattered reports of cluster-level shutdowns tied to liquid-handling failures, but nothing approaching the scale or societal visibility of a major cloud outage.

While we still believe high-profile failures are possible, their broader impact will likely be limited. Despite growing enterprise adoption, most AI systems are not yet embedded deeply enough in critical business processes to trigger widespread disruption. As a result, even a significant leak-related outage is unlikely to spark the kind of global headlines seen after the AWS blackout — though it may accelerate industry efforts around standards, training, instrumentation, and risk-mitigation practices.

10. The GPU secondary market skyrockets

As hyperscalers and neocloud providers refresh their fleets, earlier generations of GPUs, notably Ampere- and Hopper-based accelerators, will increasingly be retired to make room for newer, more efficient architectures. This raises a key question already weighing on investors: what is the real depreciation timeline for AI hardware on hyperscaler balance sheets?
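Why the depreciation question matters can be shown with straight-line depreciation, a common but not universal accounting convention: the annual expense is (cost − salvage value) / useful life. The Python sketch below applies it to a hypothetical $1 billion GPU fleet; the fleet cost, the zero salvage value, and the range of useful lives are illustrative assumptions, not figures from any company's filings.

```python
def straight_line_annual_expense(cost: float, useful_life_years: int,
                                 salvage: float = 0.0) -> float:
    """Annual depreciation expense under straight-line depreciation."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be a positive number of years")
    return (cost - salvage) / useful_life_years


# Hypothetical $1B GPU fleet with no salvage value (illustrative assumption).
fleet_cost = 1_000_000_000
for years in (3, 4, 5, 6):
    expense = straight_line_annual_expense(fleet_cost, years)
    print(f"{years}-year life: ${expense / 1e6:,.0f}M per year")
```

Stretching the assumed life from three to six years halves the annual charge. A healthy secondary market would show up as a higher salvage value, which also lowers the expense.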

We expect most older GPUs to shift to lower‑complexity inference workloads or to the training of smaller, less compute‑intensive models. We believe it is still too early for the widespread decommissioning of entire data centers built on these platforms; if that happened, it could flood the secondary market with GPUs seeking a second productive life elsewhere.

Enterprise IT environments and colocation providers will see growing volumes of these second‑hand GPUs entering their ecosystems, often at attractive price points. Integrating these “intruders” into general‑purpose, lower‑density compute environments will introduce new operational and thermal challenges. Operators will need to manage concentrated heat loads, non‑uniform rack densities, and power profiles that differ from their conventional estate.

The bubbling question we can’t avoid — even if we tried

Speculation about an AI “bubble” has increasingly dominated media narratives throughout 2025, and the conversation is unlikely to quiet down in 2026. It is true that many AI‑adjacent companies are trading at lofty valuations, buoyed by optimism about future adoption and monetization, an optimism that may not prove durable. There is a meaningful possibility that equity markets enter correction territory in 2026, bringing P/E ratios closer to historical norms.

Yet even in a cooling market environment, we do not expect the data‑center buildout to slow materially. Hyperscalers continue to generate ample cash flow to support aggressive infrastructure expansion, and their balance sheets remain lightly leveraged, giving them capacity to raise additional capital if needed. Strategic imperatives will outweigh short‑term market pressure: these companies are locked in a race to establish AI hegemony, or risk being left behind.

In other words, financial markets may wobble, but the underlying drivers of AI infrastructure investment remain intact. The bubble debate will rage on, but the buildout will continue.

Looking ahead: embracing another year of acceleration and uncertainty

As with every prediction cycle, only time will reveal which of these dynamics take hold and which fade into the background. What is certain, however, is that 2026 will yet again challenge our assumptions. The pace of AI‑driven infrastructure evolution shows no signs of slowing, and the industry will continue navigating a rare combination of technological disruption, supply‑chain reinvention, and unprecedented demand for capacity.

While we avoid grand year‑end platitudes, it is fair to say that much will change — and much will stay the same. Power will remain the currency of competitiveness, AI will continue to push infrastructure to its limits, and operators and vendors alike will be forced to adapt faster than ever. At Dell’Oro Group, we look forward to tracking, analyzing, and interpreting these shifts as they unfold.

Here’s to a 2026 that will undoubtedly keep all of us in the data‑center world busy — and to the insights that the next twelve months will bring!