
ABSTRACT

Broadband access networks are approaching a major tipping point, driven by two simultaneous catalysts: the shift to next-generation fiber technology and the shift to openness, disaggregation, and automation. The world’s largest broadband providers are quickly realizing that the need for increased throughput is matched by the need for a highly scalable network that can respond quickly to the changing requirements of the service provider, its subscribers, and its vendor and application partners. The ability to provision and deliver new services in a matter of hours, rather than weeks or months, is just as much a priority as the ability to deliver up to 10Gbps of PON capacity. Although service providers may have very different business drivers for the move to open, programmable networks, there is no question that the combination of data center architectural principles and 10G PON technology is fueling a coming wave of next-generation fiber network upgrades.

A Convergence of Speed and Openness

Service providers that are either exploring their first fiber network rollouts or looking to upgrade their first-generation networks are doing so in the midst of multiple business and technological shifts. These shifts are converging at the same time, creating the potential for a sea change in how service providers think about and architect their broadband access networks.

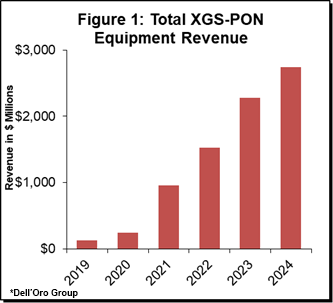

The first of these changes is the most obvious: the widespread focus on 10Gbps as the baseline capacity for upcoming FTTH network buildouts. The first FTTH networks were deployed beginning in the early 2000s, primarily using first-generation technologies, including BPON, 1G EPON, and 2.5G GPON. Though the latter two technologies continue to form the backbone of most FTTH networks today, subscribers’ demand for more bandwidth is quickly pushing service providers to move to 10Gbps technologies, including XGS-PON. Dell’Oro Group projects that total revenue for XGS-PON equipment, including both OLTs and ONTs, will grow from just over $121 million in 2019 to $2.7 billion in 2024 (Figure 1), as service providers move relatively quickly to expand the bandwidth they can offer.
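As a rough check on how quickly that forecast implies the market will grow, the compound annual growth rate (CAGR) can be computed from the two quoted endpoints, where R_y is XGS-PON equipment revenue in year y, in millions of US dollars. This is our own arithmetic on the figures above rather than a number from the Dell’Oro forecast, and it rounds "just over $121 million" down to $121 million, so the true implied rate is slightly lower:

```latex
\mathrm{CAGR} = \left(\frac{R_{2024}}{R_{2019}}\right)^{1/(2024-2019)} - 1
              = \left(\frac{2{,}700}{121}\right)^{1/5} - 1
              \approx 22.3^{0.2} - 1
              \approx 0.86
```

In other words, the projection corresponds to XGS-PON equipment revenue growing by roughly 86% per year, on average, over the five-year period.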

With cable operators and other ISPs regularly offering 1Gbps speeds to their subscribers, and with MSOs coalescing around a path to 10Gbps downstream offerings through DOCSIS 4.0 and deep fiber, service providers absolutely must have a short-term plan for delivering 10Gbps of capacity. Subscribers’ demand for faster speeds and better performance shows no sign of slowing. With 10Gbps PON capacity, service providers can stay ahead of the bandwidth consumption curve for longer, while they focus on integrating open data center technologies to speed up the delivery of new services.

The adoption and integration of those open data center tools and techniques represents the second major technological shift occurring right now. Although it is arguably the most significant change facing service providers, it also represents the biggest opportunity they have to fundamentally alter their broadband access business for the foreseeable future.

The combination of 10Gbps capacities and open data center technologies positions service providers to finally move on from business as usual; that is, the delivery of a static pipe of broadband capacity whose improvements and upgrades reside outside of their control and in the hands of their traditional equipment and silicon suppliers.

The service providers that adopt the combination of 10Gbps PON and openness will be best prepared to accomplish three major goals:

- Deliver 10Gbps capacity and the advanced, multi-gigabit services subscribers will expect and require, using a cloud-native infrastructure that treats bandwidth and the delivered applications as workflows;

- Anticipate and weather rapid increases in traffic demand with a highly targeted, elastic infrastructure that can be activated without a forklift upgrade;

- Develop an access network infrastructure that can process multiple workloads beyond broadband access, including hosted services that can be offered on a wholesale basis, as well as fixed-mobile convergence applications.

There is one constant across all broadband networks: regular capacity increases that drive ongoing network upgrades and expansions. Service providers that move today with the combination of 10Gbps PON technologies and cloud-based architectures will be able to address this steady capacity growth more quickly and economically. These service providers will also be able to layer on more services without costly equipment upgrades, thanks in part to the abstraction of software drivers and applications from the underlying hardware and to the containerization of those applications.

Service providers that adopt the combination of 10Gbps PON and cloud-based architectures are also giving themselves the ability to shift their focus from network platforms and connectivity to services and edge-based applications. By disaggregating the application and control planes from the underlying infrastructure, these providers worry less about how the inevitable technology changes will impact their network platforms, as well as their technology suppliers. Additionally, their time-to-market for new services and applications is greatly reduced. In other words, the nexus of control moves from their technology supplier partners to the service providers themselves. That is a critical benefit that will pay dividends down the road for decades.

What Operators Want

No two broadband service providers have the same starting point, nor do they face the same competitive threats. Thus, the drivers and priorities for their shifts to 10G PON and cloud-based infrastructures can be radically different. Depending on those priorities, the paths to implementing these new architectures also differ and can emphasize automation, virtualization, service convergence, or disaggregation.

In developing this paper, we spoke with multiple operators around the world regarding their plans, strategies, and business drivers for deploying 10Gbps-capable FTTH in conjunction with their shift to open, cloud-based infrastructure. Together, these operators delivered fixed broadband services to roughly 65 million individual subscribers as of the second quarter of 2020. Their broadband networks include a mix of copper and fiber technologies, running the gamut from ADSL, VDSL, and G.fast to 2.5Gbps GPON. All are in the early stages of adding XGS-PON to their networks and consider it their 10Gbps technology of choice for the next decade.

The addition of XGS-PON and the move to open, cloud-based infrastructure are where nearly all of these operators find agreement. In fact, most agree that it makes sense to introduce a new infrastructure model alongside the transition to higher-speed technologies, because both transitions are focused on delivering spare capacity. This spare capacity can be reserved for new applications and services, but also for unusual circumstances, such as those experienced during the COVID-19 pandemic. In those situations, spare capacity can be quickly deployed to ensure that telework and remote learning environments are adequately supported. Two of these operators agree that the capacity their networks will gain from this transformation will be extremely beneficial, but consider the timing of the transitions to 10Gbps access and to open, disaggregated infrastructure to be coincidental.

Additionally, all of the operators agree with the general principles proposed in specifications and reference designs such as CORD (Central Office Re-Architected as a Datacenter), SEBA (Software-Enabled Broadband Access), VOLTHA (Virtual OLT Hardware Abstraction), Broadband Forum’s Cloud CO, and SD-BNG (Software-Defined Broadband Network Gateway). Specifically, these projects are driven by two shared goals: moving traditional networking equipment into software VNFs (Virtual Network Functions) that can run on data-center-style servers, and creating a fully automated platform that can be coordinated and distributed across multiple physical locations.

All of these operators agree that they need to move from monolithic, hardware-based systems and waterfall engineering approaches to more functional, distributed blocks, using a DevOps approach to service development and deployment as well as an abstraction layer that allows for maximum service independence.

All of these operators compete in a world where application providers such as Netflix, Facebook, Google, and others can launch a new service or make a refinement in a matter of days or weeks. As a result, they want to fundamentally redesign their networks, moving away from a tight coupling to proprietary hardware and business processes and toward rapid application development through the contributions of both internal and external developers.

At a high level, the general goal is to abstract away vendor-specific implementations from the business layers above, so that the OSS and BSS systems, which have historically been developed by network equipment vendors, are no longer tightly integrated with the underlying network infrastructure. This tight integration of OSS and BSS systems with network infrastructure was by design. Service providers weren’t focused on the rapid deployment of new services and applications. Instead, they were focused on stability, reliability, and the maximization of their network assets.

Of course, that business model has been augmented by the need to straddle both worlds: a world where reliability and stability are just as critical as getting services out to subscribers as quickly as the application providers who rely on their networks do. But that can’t be accomplished without decoupling the control and management layers from the network elements. That decoupling ultimately gives service providers the ability to change out network components and continuously make service modifications and upgrades without fear that those changes must go through a laborious process of integration with the existing OSS and BSS systems.

Service and network modifications are gated by the network component that requires the longest development cycle or implementation timeline. Typically, integration with the existing OSS is that gating factor, and it is why the operators we spoke with were adamant about the need to move to a more open networking architecture. Multiple operators cited the lengthy OSS impact of a single line card change in an existing DSLAM or OLT and noted that this impact would be unsustainable, especially with pending upgrades to 10Gbps across major portions of their networks. The introduction and delivery of services across new infrastructure takes months, because of the time required to provision those services on the OSS and BSS systems.

The service providers we spoke with want the process of infrastructure deployment, service creation, and delivery to take a couple of weeks, not months. Again, that is only possible by decoupling the underlying network infrastructure from the business process layers above it. One operator noted that the combination of hardware abstraction and the decoupling of network elements from siloed OSS platforms means network technology is finally ahead of marketing. In other words, the traditional sequence, in which marketing defines a service, network engineering identifies the features the service requires, and vendor partners integrate those features into their next software loads, is turned on its head. Now, the technology team can provide marketing with a list of containerized services that can be pieced together into a larger offering, whether hosted parental controls, bandwidth-on-demand, or low-latency upstream boosts for online gaming and VR applications.

End-to-End Automation, Self-Reliance, and Choice are Key Drivers

Throughout our conversations, the operators were quick to point out that their adoption of software-defined access technologies had little to do with virtualization and disaggregation as goals in and of themselves. All the operators noted that those concepts have little business value on their own. Instead, it is the capabilities those architectural concepts yield that provide the impetus for this significant technological transition.

For some of the operators we spoke with, the automation of service and application provisioning is the primary reason for their adoption of open, cloud-based infrastructure along with their shift to 10Gbps fiber technologies. For these operators, the combination of open, programmable network elements and the additional bandwidth prepares them for the next decade of broadband because they will always have spare capacity for new services, and those new services can be provisioned, deployed, and refined almost instantaneously.

But for these operators, that spare capacity would be squandered without an end-to-end automation system in place. For them, virtualizing network elements is less of a priority than the ability to orchestrate and automate the functions of those elements via a Software-Defined Networking (SDN) controller, such as ONOS (Open Network Operating System), or one developed in-house.

Automation can be a vague and far-reaching term. For these operators, however, automation specifically means using software to automate network provisioning by determining the optimal way to configure and manage a network. It replaces the traditional, painstaking process of programming individual network elements through CLI (command-line interface) instructions. One benefit of moving away from CLI instructions is that business processes and service provisioning can be implemented in modern, widely known programming languages, such as Python or Java.
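To make the contrast with per-element CLI work concrete, here is a minimal Python sketch of the software approach: a single service order is validated once and then translated into configuration for each element it touches. Everything in it is hypothetical and for illustration only, including the `ServiceRequest` fields, the element names, and the dictionary layout; a real deployment would hand the rendered intents to an SDN controller or vendor API rather than returning them.

```python
from dataclasses import dataclass

# Nominal XGS-PON line rate; usable subscriber throughput is lower in practice.
XGS_PON_LINE_RATE_MBPS = 10_000


@dataclass(frozen=True)
class ServiceRequest:
    """Hypothetical subscriber service order; field names are illustrative."""
    subscriber_id: str
    olt_id: str
    pon_port: int
    onu_serial: str
    downstream_mbps: int
    upstream_mbps: int
    c_tag: int  # customer VLAN ID
    s_tag: int  # service VLAN ID


def validate(req: ServiceRequest) -> None:
    """Reject an order that no element could honor, before touching the network."""
    for label, rate in (("downstream", req.downstream_mbps), ("upstream", req.upstream_mbps)):
        if not 0 < rate <= XGS_PON_LINE_RATE_MBPS:
            raise ValueError(f"{label} rate out of range: {rate} Mbps")
    for tag in (req.c_tag, req.s_tag):
        if not 1 <= tag <= 4094:  # usable 802.1Q VLAN IDs
            raise ValueError(f"invalid VLAN ID: {tag}")


def render_config(req: ServiceRequest) -> dict[str, dict]:
    """Translate one validated order into per-element intents (OLT and BNG)."""
    validate(req)
    return {
        req.olt_id: {
            "pon_port": req.pon_port,
            "onu_serial": req.onu_serial,
            "vlan": {"c_tag": req.c_tag, "s_tag": req.s_tag},
            "bandwidth_profile": {"down_mbps": req.downstream_mbps,
                                  "up_mbps": req.upstream_mbps},
        },
        "bng": {
            "subscriber": req.subscriber_id,
            "vlan": {"c_tag": req.c_tag, "s_tag": req.s_tag},
            "rate_limit_mbps": req.downstream_mbps,
        },
    }


if __name__ == "__main__":
    order = ServiceRequest("sub-001", "olt-7", 3, "ABCD12345678", 2000, 1000, 101, 200)
    for element, config in render_config(order).items():
        print(element, config)
```

Because validation happens once, centrally, a malformed order fails before any element is touched, which is one of the practical advantages over entering CLI commands element by element.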

Automation can provide an almost immediate reduction in operational costs. It has also proven critical during situations such as the COVID-19 pandemic, when stay-at-home mandates forced operators to limit personnel access to Network Operations Centers (NOCs). In those cases, network engineers could automate the provisioning and management of services remotely and continuously update scripts to monitor the health of network elements that saw dramatically increased utilization.

Speaking of the pandemic, the operators we spoke with generally agreed that network automation was a critical enabler for bandwidth-on-demand applications, especially for delivering upgraded bandwidth to remote workers and online gamers within an hour or two. Without some level of network automation, provisioning additional bandwidth would have taken multiple days. The need for on-demand bandwidth is also matched by the need for constant, daily monitoring of both the overall network and individual network elements, especially when traffic volumes increase significantly on an almost-daily basis.
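Bandwidth-on-demand of the kind described above is, at its core, a time-limited change to a subscriber’s rate profile plus an admission check against the port’s capacity. The Python sketch below shows that logic under stated assumptions: `PonPort`, `Boost`, and the idea of admitting boosts against a fixed committed-capacity budget (8,000 Mbps in the usage example, chosen arbitrarily) are all hypothetical, and real OLTs enforce rates through their own QoS and dynamic bandwidth allocation mechanisms.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Boost:
    """A temporary rate increase for one subscriber (hypothetical model)."""
    extra_mbps: int
    expires_at: datetime


class PonPort:
    """Tracks committed downstream rates on one PON port (hypothetical model)."""

    def __init__(self, capacity_mbps: int, base_rates: dict[str, int]):
        self.capacity_mbps = capacity_mbps  # budget for committed rates
        self.base = dict(base_rates)        # subscriber -> base rate in Mbps
        self.boosts: dict[str, Boost] = {}  # subscriber -> active boost

    def _expire(self, now: datetime) -> None:
        """Drop boosts whose window has ended, reverting to the base rate."""
        self.boosts = {s: b for s, b in self.boosts.items() if b.expires_at > now}

    def committed_mbps(self, now: datetime) -> int:
        self._expire(now)
        return sum(self.base.values()) + sum(b.extra_mbps for b in self.boosts.values())

    def request_boost(self, subscriber: str, extra_mbps: int,
                      duration: timedelta, now: datetime) -> bool:
        """Grant a boost only if the port's committed budget can absorb it."""
        if subscriber not in self.base:
            raise KeyError(f"unknown subscriber: {subscriber}")
        in_use = self.committed_mbps(now)
        current = self.boosts.get(subscriber)
        if current is not None:
            in_use -= current.extra_mbps  # a new boost replaces the old one
        if in_use + extra_mbps > self.capacity_mbps:
            return False  # would overcommit the port; leave the profile unchanged
        self.boosts[subscriber] = Boost(extra_mbps, now + duration)
        return True

    def rate_mbps(self, subscriber: str, now: datetime) -> int:
        self._expire(now)
        boost = self.boosts.get(subscriber)
        return self.base[subscriber] + (boost.extra_mbps if boost else 0)


if __name__ == "__main__":
    t0 = datetime(2020, 6, 1, 9, 0)
    port = PonPort(capacity_mbps=8000, base_rates={"a": 1000, "b": 1000})
    print(port.request_boost("a", 2000, timedelta(hours=2), t0))  # True
    print(port.request_boost("b", 5000, timedelta(hours=1), t0))  # False: 4000 + 5000 > 8000
    print(port.rate_mbps("a", t0 + timedelta(hours=3)))           # 1000, boost expired
```

The expiry step is what turns a manual, multi-day change into a self-reverting one: the subscriber drops back to the base profile without anyone having to remember to undo the upgrade.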

Two operators we spoke with noted that automation is still imperfect because of the underlying complexity and diversity of network elements. SDN helps smooth out some of those imperfections by orchestrating the management of the underlying network elements. One operator specifically mentioned that SDN helped solve one of its biggest hurdles to implementing end-to-end automation: network operating systems being out of sync with the network inventory. Often, this operator would attempt to automate the provisioning of a service, only for the process to fail because inventory databases held incorrect data about network elements as a result of differing polling processes. With an SDN orchestration layer, information about the network and the capabilities of underlying elements can remain in sync and always ready for the automated provisioning of a new service. Although SDN isn’t always a prerequisite for automation, it certainly provides a cohesive framework for viewing the network holistically. SDN can define and implement the policies to deal with any inventory issues that arise, and these policies ultimately form the basis of intent-based networking.
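The inventory problem this operator describes can be illustrated with a small reconciliation step that runs before any automated provisioning. In this hypothetical Python sketch, `inventory` stands in for the inventory database and `discovered` for the state an SDN controller reports from the live network; the policy of trusting discovered state and withholding unseen elements from provisioning is an assumption, one of several an operator might choose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    """Minimal view of a network element; fields are illustrative."""
    element_id: str
    model: str
    software_version: str
    free_ports: int


def reconcile(inventory: dict[str, Element],
              discovered: dict[str, Element]) -> tuple[list[str], list[str], list[str]]:
    """Compare the inventory database with what the controller actually sees.

    stale:   present in both, but the attributes differ
    missing: in the inventory, not seen on the network
    unknown: seen on the network, absent from the inventory
    """
    stale = sorted(k for k in inventory.keys() & discovered.keys()
                   if inventory[k] != discovered[k])
    missing = sorted(inventory.keys() - discovered.keys())
    unknown = sorted(discovered.keys() - inventory.keys())
    return stale, missing, unknown


def sync_inventory(inventory: dict[str, Element],
                   discovered: dict[str, Element]) -> tuple[list[str], list[str], list[str]]:
    """Assumed policy: discovered state wins; unseen elements are withheld."""
    stale, missing, unknown = reconcile(inventory, discovered)
    for k in stale + unknown:
        inventory[k] = discovered[k]
    for k in missing:
        del inventory[k]  # a real system might flag these for review instead
    return stale, missing, unknown


def can_provision(inventory: dict[str, Element], element_id: str,
                  ports_needed: int = 1) -> bool:
    """Only provision against an element the reconciled inventory vouches for."""
    element = inventory.get(element_id)
    return element is not None and element.free_ports >= ports_needed
```

Running `sync_inventory` before each provisioning job means that a request targeting an element the database believes has free ports, but which the controller reports as full, is refused up front instead of failing partway through.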

Operators differ on the level of virtualization required in PON OLTs and other access network infrastructure to deliver end-to-end automation. Those with existing, widespread PON infrastructure have worked with their technology suppliers to expose enough open APIs for their SDN controllers to provision and manage that equipment. For these operators, there is a clear separation between the need for virtualization and the need for automation. They don’t want to rip and replace access equipment they only recently deployed simply to support virtualization when automation is their short-term goal. These operators are still moving to 10Gbps PON technology because of the competition they face from cable operators and other ISPs. However, their short-term virtualization efforts are taking place in the core of their network and at the BNG (Broadband Network Gateway), not in the access portion. This focus on the core and edge will likely change as aging OLTs in the access network come due for replacement.

For other operators, however, particularly those rolling out greenfield 10Gbps-capable FTTH networks, automation remains the immediate goal, but virtualized access infrastructure prepares them for a decade of reduced operational costs, increased network efficiency, and agility in addressing new markets. In particular, these operators recognize that the network slicing capabilities characteristic of 5G mobile networks will also be a significant feature of fixed broadband networks. With network slicing, broadband providers will be able to offer a range of residential and business services, as well as wholesale and leased access services where that makes sense. With 10Gbps PON providing the capacity baseline, operators can tailor service tiers for end customers who might require extremely low latency, increased security, or increased reliability. Complex network slicing can’t be delivered consistently without, at a minimum, end-to-end automation and a high degree of network virtualization.

Beyond network slicing, these operators also plan to extend their network automation capabilities to include machine learning and artificial intelligence (AI) and, ultimately, to deliver intent-based networking. Intent-based networking uses machine learning and AI to automatically perform routine tasks and respond to platform and network issues in order to achieve the intents and business goals defined by the operator.

Defining business goals, and having enough control over how software and hardware platforms are provisioned and managed to achieve them, is what operators ultimately want from their network virtualization and automation efforts. For years, these operators have felt that their success or failure hinged more on the software and hardware release cycles of their technology partners than on their own ability to define a service, implement it, and then market and sell it effectively. This self-reliance is both a critical driver and an optimal outcome of these operators’ virtualization and automation efforts. For them, simultaneously adopting open networking architectures and 10Gbps PON makes perfect sense, because these are the technologies they will rely on for the next decade.

The service providers who value this self-reliance have typically been active contributors to standards bodies focused on open networking platforms and have also developed one or more key components themselves. In particular, these operators have developed their own SDN orchestration and control platforms and publish their APIs and reference designs so that their vendor partners can streamline hardware and software integration. The key for these operators is the increased control they have over the prioritization of service and network element onboarding. This is especially critical when testing a new service or migrating existing customers to a new platform. Instead of relying on a complex set of network elements tied together via a series of standardized interfaces and protocols, operators can reduce that complexity and maintain the programming logic for all platforms in the SDN controller. Functions that are duplicated in network elements, such as routing and policy enforcement, can be abstracted and maintained once in the orchestration layer rather than across multiple network elements. By doing this, service providers can introduce new services and network equipment much faster, without the difficulty of scaling services across a network of unique hardware platforms.
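The idea of keeping a policy once in the orchestration layer while heterogeneous hardware consumes it can be sketched as a set of per-platform adapters, loosely in the spirit of the vendor adapters used by hardware-abstraction projects such as VOLTHA. In this Python sketch, `RatePolicy`, `OltAdapter`, the two vendor adapters, and their output formats are all hypothetical; no real vendor syntax is implied.

```python
import json
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class RatePolicy:
    """Vendor-neutral rate policy, defined once in the orchestration layer."""
    name: str
    down_mbps: int
    up_mbps: int


class OltAdapter(ABC):
    """Interface every hardware platform implements (hypothetical)."""

    @abstractmethod
    def render(self, policy: RatePolicy) -> str:
        """Translate the neutral policy into this platform's own format."""


class VendorAAdapter(OltAdapter):
    """Hypothetical platform that takes a text profile with rates in kbit/s."""

    def render(self, policy: RatePolicy) -> str:
        return (f"profile {policy.name} "
                f"down-kbps {policy.down_mbps * 1000} up-kbps {policy.up_mbps * 1000}")


class VendorBAdapter(OltAdapter):
    """Hypothetical platform that takes a JSON document with rates in Mbit/s."""

    def render(self, policy: RatePolicy) -> str:
        return json.dumps({"profile": policy.name,
                           "ds_mbps": policy.down_mbps, "us_mbps": policy.up_mbps})


class Orchestrator:
    """Holds the adapters and pushes one policy to every registered element."""

    def __init__(self) -> None:
        self.adapters: dict[str, OltAdapter] = {}  # element ID -> adapter

    def register(self, element_id: str, adapter: OltAdapter) -> None:
        self.adapters[element_id] = adapter

    def apply(self, policy: RatePolicy) -> dict[str, str]:
        return {eid: adapter.render(policy) for eid, adapter in self.adapters.items()}
```

Onboarding a new hardware platform then means writing one adapter, while the policy itself and the services built on it stay unchanged.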

Operators can choose to fully centralize the orchestration functions or to distribute them across multiple mini data centers that are physically separated but logically connected. These mini data centers can be distributed by region, city, neighborhood, or number of customers served. New service and application testing can be isolated to individual mini data centers, as can network or service migrations. Faults can be contained the same way: the impact of an OLT line card failure, for example, can be isolated to a few hundred customers rather than a few thousand. With 10Gbps services and the expectation of premium performance and reliability, the isolation and immediate mitigation of faults are critical.

Supplier or supply chain diversification is an additional driver for operators to add more virtualization and abstraction capabilities to their networks. With the vendor landscape consolidating, and with operators looking to reduce their reliance on a single vendor for operating systems and element management systems as well as to cut expensive vendor maintenance contracts, there is a desire to build an orchestration layer that allows them to pick and choose network equipment platforms. This is especially the case as operators begin rolling out 10Gbps FTTH services. Operators want to be able to select platforms from OEMs, ODMs, and contract manufacturers, depending on their business model and how much systems integration they want to take on themselves. They want to define what is required from their hardware suppliers and integrate that equipment quickly into their networks, so they can focus on developing new services or integrating new services developed by third parties.

Lastly, some operators noted that their trusted equipment vendors were already moving toward automation and, specifically, SDN. Their product roadmaps all include elements of both, which effectively pushes operators in the same direction. All agree that this isn’t a bad thing and instead reflects the industry’s priorities in general.

Accelerating Operator Transformation

The operators rolling out 10Gbps-based, open, and disaggregated fiber network architectures are clearly committed to transforming their businesses for the next decade. The combination of additional capacity and the ability to control how that capacity is allocated across a wide range of services positions them to stay ahead of their competition, while also letting them define internal business goals that can be programmed across the entire network. If energy efficiency becomes a high priority, the operator can intelligently allocate workloads to less power-hungry platforms. If low latency becomes a high priority, operators can automate route changes to platforms with better performance metrics. For operators, control of their own destiny as a business has been a longstanding goal that can now be achieved through automation, virtualization, and disaggregation.
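The energy-efficiency and low-latency examples above amount to choosing a different objective for the same placement decision. The Python sketch below illustrates that under assumed inputs: the `Platform` fields, the per-platform power and latency figures, and the two intent names are hypothetical, and a production orchestrator would weigh many more constraints.

```python
from dataclasses import dataclass
from operator import attrgetter


@dataclass(frozen=True)
class Platform:
    """A candidate host for a workload; all figures are illustrative inputs."""
    name: str
    watts_per_gbps: float  # energy cost of carrying traffic on this platform
    latency_ms: float      # latency of the path through this platform
    free_gbps: float       # spare capacity available right now


# Each operator intent maps to the attribute it wants minimized.
INTENT_OBJECTIVES = {
    "energy": attrgetter("watts_per_gbps"),
    "latency": attrgetter("latency_ms"),
}


def place(workload_gbps: float, platforms: list[Platform], intent: str) -> Platform:
    """Choose the platform with enough capacity that best serves the intent."""
    if intent not in INTENT_OBJECTIVES:
        raise ValueError(f"unknown intent: {intent}")
    candidates = [p for p in platforms if p.free_gbps >= workload_gbps]
    if not candidates:
        raise RuntimeError("no platform has enough spare capacity")
    return min(candidates, key=INTENT_OBJECTIVES[intent])


if __name__ == "__main__":
    fleet = [
        Platform("edge-a", watts_per_gbps=12.0, latency_ms=1.5, free_gbps=40),
        Platform("central-b", watts_per_gbps=6.0, latency_ms=8.0, free_gbps=100),
        Platform("edge-c", watts_per_gbps=9.0, latency_ms=1.0, free_gbps=5),
    ]
    print(place(10, fleet, "energy").name)   # central-b
    print(place(10, fleet, "latency").name)  # edge-a (edge-c lacks capacity)
```

Changing the operator’s priority then means changing the intent passed in, not rewriting placement logic on each platform.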

These operators are at the forefront of a larger, multi-year transition to 10Gbps fiber and open networking. Though the two initiatives don’t have to be tied together, a growing number of operators worldwide are realizing that, together, they significantly advance their competitiveness and long-term viability. The changes free operators to focus on creating additional value through the development and introduction of new services, either of their own design or in partnership with third-party providers. 10Gbps-capable access networks give them the vehicle, while open programmability gives them the ability to make that vehicle whatever they want it to be: an engine for residential and business service growth or one designed to support fixed-mobile convergence.

As the operators we spoke with have explained, this transformation process will be a lengthy one, impacting not only network infrastructure but also the very culture of their organizations. In the context of broadband access networks, connectivity will finally take a backseat to service creation. By creating a DevOps environment, operators can experiment and learn about the benefits and impacts of new services on a limited set of live customers. Then, they can take what they’ve learned to quickly harden the service and roll it out across their entire subscriber base.

This DevOps model to accelerate service creation and deployment will take time to institute, but it is the goal of these and other operators, as well as their competitors. Cable operators, for example, are following a similar path of virtualizing key broadband network platforms to create a DevOps environment, but also to help them scale their broadband capacity more efficiently. Cable operators have also defined a path to 10Gbps capacity in their access networks.

Conclusion

For operators that have found themselves consistently behind their competition in offering gigabit services, and that contend with the perception that they are staid providers of connectivity who can’t innovate, the dual transition to 10Gbps-capable fiber access and open networking principles provides the catalyst needed to push them ahead. Many operators already understand this formula, and others are sure to follow.