The signals are confusing. AI boom, global trade war, supply shortages, competitors merging: it takes focus and a lot of analysis to cut through the upheaval in the Local Area Networking market and predict future trends. Luckily, we have decades of historical data and some finely tuned models that enable us to make these top three predictions for the enterprise networking market in 2026.

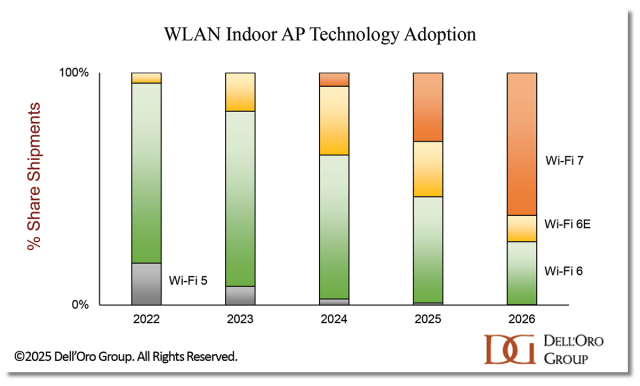

1. Wi-Fi 7 will dominate enterprise wireless connectivity

For some enterprises, the pristine 6 GHz band will be too alluring to resist. Other organizations will be nearing the end of their WLAN equipment’s lifecycle and, seeing a growing number of Wi-Fi 7-capable devices in their ecosystem, will want to future-proof the network. Still others may be in the midst of a massive digital transformation project, needing the best-quality WLAN to carry the steady streams of data feeding their digital operations.

While private 5G deployments will also grow, we expect enterprise adoption of private cellular to remain constrained to a niche, high-end portion of the market. Only especially difficult radio conditions, or very tight security and performance requirements, will justify the additional cost and complexity of private cellular. We don’t expect private cellular to cannibalize much of the WLAN market either. Enterprises that choose private cellular for their operations are focusing on new use cases and will continue to deploy WLAN as well.

There’s no doubt: enterprise-class Wi-Fi 7 will become mainstream in 2026. With no second, enhanced version (like Wi-Fi 5 Wave 2 or Wi-Fi 6E) to dilute the uptake, we expect the Wi-Fi 7 adoption curve to be steeper than it was for any other enterprise WLAN technology.

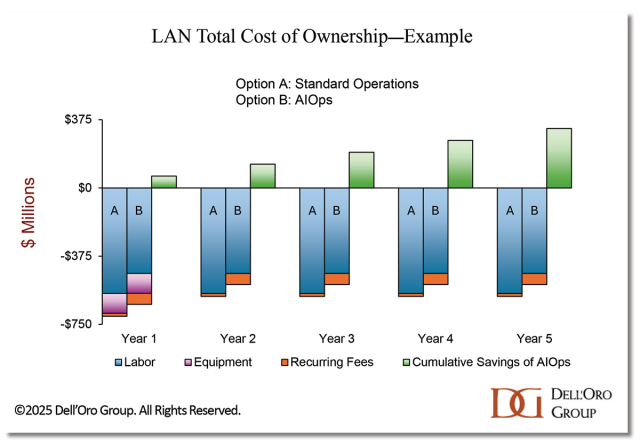

2. The AIOps business case will prove itself in 2026

AI FOMO is rampant. Enterprises see so much potential with AI, but there are associated risks, and it can be difficult to extract tangible benefits. However, many enterprises have already witnessed dramatic results from using Machine Learning to ease the burden of IT Operations, including shorter deployment times, a sharp drop in the number of trouble tickets, and faster problem resolution. Layering in AI capabilities makes LAN management applications easier to use and more accessible across an organization.

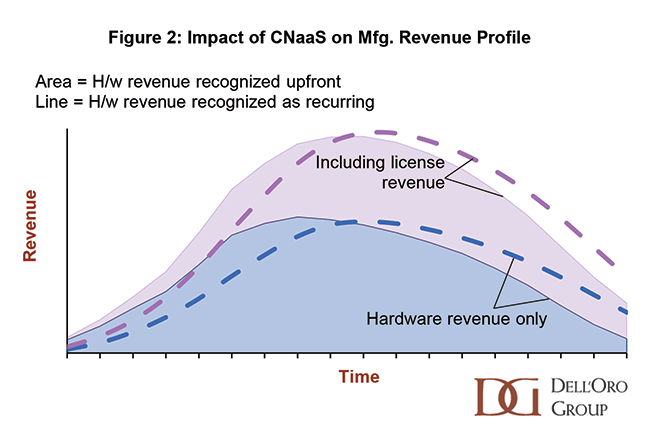

AIOps platforms are available from most of the major enterprise IT vendors. AI and Machine Learning capabilities often carry recurring license fees, driving up enterprises’ LAN equipment costs. This premium may have dissuaded enterprises from adopting AIOps in the past.

However, over the past few years, vendors have added features and increased the value of those licenses, including 24×7 support bundled into the recurring fee. Now, by paying the equivalent of a fraction of a network engineer’s salary in license fees, a mid-sized enterprise can reduce hours spent on operations and level-one support in order to allocate more of their valuable networking experts’ time to AI projects.

Every enterprise’s business case will be different, but with networking expertise in high demand, we predict that in 2026, the labor savings will outweigh the additional license costs for the majority of mid-sized and large enterprises.
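To make the trade-off concrete, here is a minimal back-of-the-envelope sketch in Python. Every figure in it (the license fee, the hours saved, the loaded hourly cost) is a hypothetical placeholder rather than market data, and the function names are our own; an enterprise would plug in its own numbers.

```python
def aiops_net_benefit(annual_license_fee, hours_saved_per_year, loaded_hourly_cost):
    """Annual labor savings minus the recurring AIOps license fee."""
    labor_savings = hours_saved_per_year * loaded_hourly_cost
    return labor_savings - annual_license_fee


def break_even_hours(annual_license_fee, loaded_hourly_cost):
    """Engineer hours that must be freed per year to cover the license fee."""
    return annual_license_fee / loaded_hourly_cost


# Hypothetical inputs, for illustration only (not taken from any vendor or survey).
LICENSE_FEE = 40_000   # recurring AIOps license cost per year
HOURLY_COST = 80       # fully loaded cost of one network engineer hour
HOURS_SAVED = 600      # operations and level-one support hours avoided per year

print("Break-even hours per year:", break_even_hours(LICENSE_FEE, HOURLY_COST))
print("Net annual benefit:", aiops_net_benefit(LICENSE_FEE, HOURS_SAVED, HOURLY_COST))
```

With these placeholder inputs, the license pays for itself once it frees up 500 engineer hours a year; any hours saved beyond that are net benefit. The sketch counts only direct labor savings and ignores softer gains such as fewer outages.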

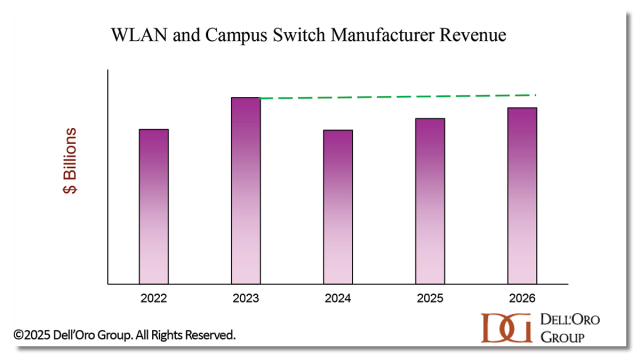

3. 2026 Local Area Networking market will beat $30 B, but still not surpass 2023

The pandemic may seem far away, but the ripple effects are still being felt. After surviving the supply constraints of 2021 and 2022, vendors unleashed a tsunami of WLAN APs and campus switches into the market in 2023, making it the top-grossing year of all time for LAN equipment revenue. Following the high volume of shipments, enterprises took time to work through the excess inventory, causing double-digit revenue declines for vendors in 2024. In 2025, the demand-driven market returned, but so did the specter of supply constraints. The AI buildout has caused a shortage of semiconductor components, the most immediate being memory.

The high WLAN and campus switch prices of 2022 and 2023 began to erode in early 2025, but in 4Q25, LAN equipment vendors raised prices to compensate for escalating component costs. Higher prices can dampen demand; however, the need to replace ageing infrastructure will be a counterweight. Enterprises must invest in their LANs in order to modernize their operations, and an upgrade to Wi-Fi 7 requires more switching power and higher bandwidth ports. Weighing the puts and takes, we believe the market will continue to grow in 2026, with vendors applying what they learned during the last supply crunch to avoid the worst in this one. The 2026 market will be well into the $30 B range, but we will need to wait one more year for revenues to beat the highs of 2023. Tune back in at the end of 2026 for more on that prediction.