The use of intelligence in the RAN is not new: both 4G and 5G deployments rely heavily on automation and intelligence to replace manual tasks, manage increasing complexity, enhance performance, and control costs. What is new, however, is the rapid proliferation of AI and generative AI, along with a shifting mindset toward leveraging AI in cellular networks. More importantly, the scope of AI’s role in the RAN is expanding, with operators now looking beyond efficiency gains and performance improvements and cautiously exploring whether AI could also unlock new revenue streams. In this blog, we review that expanding scope and the progress made so far.

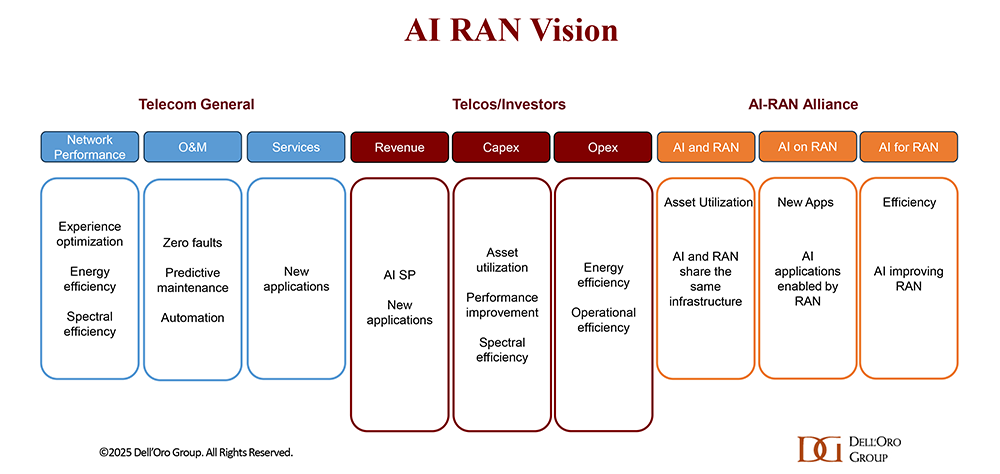

AI RAN Vision

Considering the opportunities with AI RAN, its evolving scope, the proliferation of groups working on AI RAN, the challenges of measuring its gains, and the absence of unified frameworks in 3GPP, it’s not surprising that marketing departments have some flexibility in how they interpret and present the concept of AI RAN.

Still, even with multiple industry bodies (3GPP, AI-RAN Alliance, ETSI, NGMN, O-RAN Alliance, TIP, TM Forum, etc.) and key ecosystem participants working to identify the most promising AI RAN opportunities, some common ground exists. At a high level, AI RAN is more about efficiency gains than new revenue streams. There is strong consensus that AI RAN can improve the user experience, enhance performance, reduce power consumption, and play a critical role in the broader automation journey. Unsurprisingly, however, there is greater skepticism about AI’s ability to reverse the flat revenue trajectory that has defined operators throughout the 4G and 5G cycles.

The 3GPP AI/ML activities and roadmap are mostly aligned with the broader efficiency aspects of the AI RAN vision, focusing primarily on automation, management data analytics (MDA), SON/MDT, and over-the-air (OTA) work (CSI, beam management, mobility, and positioning).

The O-RAN Alliance builds on its existing thinking and aims to leverage AI/ML to create a fully intelligent, open, and interoperable RAN architecture that enhances network efficiency, performance, and automation. This includes embedding AI/ML capabilities directly into the O-RAN architecture, particularly within the RIC/SMO, and using AI/ML for a variety of network management and control tasks.

Current AI/ML activities align well with the AI-RAN Alliance’s vision to elevate the RAN’s potential with more automation, improved efficiencies, and new monetization opportunities. The AI-RAN Alliance envisions three key development areas: 1) AI and RAN – improving asset utilization by using a common shared infrastructure for both RAN and AI workloads; 2) AI on RAN – enabling AI applications on the RAN; and 3) AI for RAN – optimizing and enhancing RAN performance. From an operator standpoint, these areas translate into the potential to boost revenue or reduce capex and opex.

TIP is actively integrating AI/ML into its Open RAN vision, focusing on automating and optimizing the RAN using AI/ML-powered rApps to manage and orchestrate various aspects of the network, including deployment, optimization, and healing.

While operators generally don’t consider AI the end destination, they believe more openness, virtualization, and intelligence will play essential roles in the broader RAN automation journey.

What is AI RAN

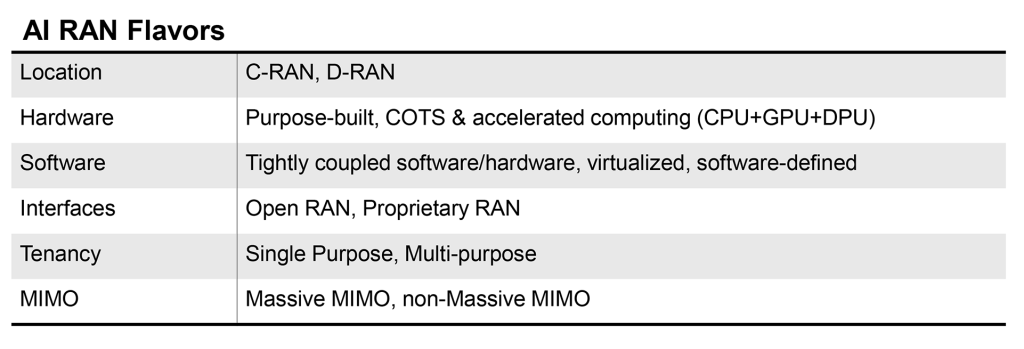

AI RAN integrates AI and machine learning across various aspects of the RAN domain. For the broader AI RAN vision, the boundaries between infrastructure and services are not clearly defined, and interpretations vary. The underlying infrastructure (location, hardware, software, interface support, tenancy) varies depending on multiple factors, such as the latency and capacity requirements for a particular use case, the value-add of AI, the state of existing hardware, power budget, and cost.

AI-RAN, the AI-RAN Alliance’s version of AI RAN, is a subset of the broader AI RAN opportunity, covering AI RAN implementations that use accelerated computing and fully software-defined, AI-native principles. AI-RAN enables the deployment of RAN and AI workloads on a shared, distributed, and accelerated cloud infrastructure. It capitalizes on the demand for AI inferencing and converts the RAN infrastructure from single-purpose to multi-purpose cloud infrastructure (NVIDIA AI-RAN Paper, March 2025).

While the ideal reference solution is AI-native and cloud-native, AI RAN does not have to wait for that vision to be realized. The majority of AI RAN deployments to date have been implemented using existing hardware.

Why integrate AI and RAN

With power and capex budgets rising on the RAN priority list, one of the fundamental questions now is where AI can add value to the RAN without breaking the power budget or growing capex. It is a valid question. After all, RAN cell sites have been around for 40+ years, and operators have had time to fine-tune their algorithms to improve performance and optimize resources. AI can make sense in the RAN, but given the preliminary efficiency gains seen so far, it will not be helpful everywhere.

Topline growth expectations are muted at this juncture. However, operators are optimistic that integrating AI and RAN will produce a number of benefits:

- Reduce opex/capex

- Improve performance and experience

- Boost network quality

- Lower energy consumption

AI can introduce efficiencies that lower the ongoing costs of deploying and managing the RAN. According to Ericsson, intelligent RAN automation can help reduce operator opex by 60%. AI will play an important role here, accelerating the automation transition, reducing complexity, and curbing opex growth. Most greenfield networks are clearly moving toward new architectures that are more conducive to automation. Rakuten Mobile operates 350K+ cells with an operational headcount of around 250 people, and the operator claims an 80% reduction in deployment time through automation. China Mobile reported a 30% reduction in MTTR using Huawei’s AI-based O&M. Nokia has seen up to 80% efficiency gains in live networks utilizing machine learning in RAN operations.

The RAN automation journey will likely take longer for existing networks. The average brownfield operator today falls somewhere between L2 (partial autonomous network) and L3 (conditional autonomous network), with some way to go before reaching L4 (high autonomous network) and L5 (full autonomous network). Even so, China Mobile recently reported it remains on track to activate L4 autonomous networking on a broader scale in 2025. Vodafone is exploring how AI can help automate multi-vendor RAN deployments, while Telefonica is implementing AI-powered optimization and automation in its RAN. According to the TM Forum, 61% of telcos are targeting L3 autonomy over the next five years.

AI can help improve RAN performance by optimizing various RAN functions, such as channel estimation, resource allocation, and beamforming, though the upside will vary. Recent activity shows that operators can realize gains on the order of 10% to 30% when using AI-powered features, often with existing hardware. For example, Bell Canada, using Ericsson’s AI-native link adaptation, increased spectral efficiency by up to 10%, improving connection capacity and reliability, and achieved up to 20% higher downlink throughput.

Initial findings from Smartfren’s (Indonesia) commercial deployment of ZTE’s AI-based computing show a 15% improvement in user experience. There could be more upside as well. At MWC Barcelona, DeepSig demonstrated that its AI-native air interface, OmniPHY, running on the NVIDIA AI Aerial platform, could achieve throughput gains of up to 70% in some scenarios.

With the RAN accounting for around 70% of the energy consumption at the cell site and around 1% to 2% of global electricity consumption (ITU), the intensification of climate change, taken together with the current site power trajectory, forms the basis for the increased focus on energy efficiency and CO2 reduction. Preliminary findings suggest that AI-powered RAN can play a pivotal role in curbing emissions, cutting energy consumption by 15% to 30%. For example, Vodafone UK and Ericsson recently showed on trial sites across London that daily 5G radio power consumption can be reduced by up to a third using AI-powered solutions. Verizon shared field data indicating 15% cost savings with Samsung’s AI-powered energy savings manager (AI-ESM). Similarly, Zain estimates that the AI-powered energy-saving feature provided by Huawei can reduce power consumption by about 20%, and Tele2 believes that smarter AI-based mobile networks can reduce energy consumption in the long term by as much as 30% to 40% while simultaneously optimizing capacity.
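As a rough illustration of how the 70% and 15% to 30% figures combine, the sketch below converts a RAN-level energy reduction into a site-level one. It rests on an assumption the figures above do not state outright: that the quoted cut applies only to the RAN’s roughly 70% share of site energy and that the non-RAN load is unchanged. The function name `site_level_saving` is hypothetical.

```python
# Back-of-envelope sketch: translate a RAN-level energy cut into a site-level cut.
# Assumption (not stated in the source figures): the reduction applies only to
# the RAN's share of site energy; the remaining site load is unchanged.

RAN_SHARE_OF_SITE_ENERGY = 0.70  # "around 70%" of cell-site energy


def site_level_saving(ran_share: float, ran_reduction: float) -> float:
    """Fraction of total cell-site energy saved when only the RAN portion
    (ran_share) is reduced by ran_reduction."""
    if not (0.0 <= ran_share <= 1.0 and 0.0 <= ran_reduction <= 1.0):
        raise ValueError("shares and reductions must be fractions in [0, 1]")
    return ran_share * ran_reduction


if __name__ == "__main__":
    # Lower and upper ends of the 15% to 30% range quoted above.
    for reduction in (0.15, 0.30):
        saving = site_level_saving(RAN_SHARE_OF_SITE_ENERGY, reduction)
        print(f"RAN cut {reduction:.0%} -> site cut {saving:.1%}")
```

Under that assumption, the 15% to 30% RAN-level range corresponds to roughly 10.5% to 21% of total site energy, a useful check when comparing radio-level claims with site-level ones.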

AI RAN Outlook

Operators are not revising their topline growth or mobile data traffic projections upward as a result of AI growing in and around the RAN. Disappointing 4G/5G returns and the failure to reverse the flattish carrier revenue trajectory help explain the increased focus on what can be controlled: for now, AI RAN is all about improving performance and efficiency and reducing opex.

Since the typical gains demonstrated so far are in the 10% to 30% range for specific features, the AI RAN business case will hinge on the cost and power envelope, as the appetite for growing capex and opex is limited.

The AI-RAN business case using new hardware is difficult to justify for single-purpose tenancy. However, if the operators can use the resources for both RAN and non-RAN workloads and/or the accelerated computing cost comes down (NVIDIA recently announced ARC-Compact, an AI-RAN solution designed for D-RAN), the TAM could expand. For now, the AI service provider vision, where carriers sell unused capacity at scale, remains somewhat far-fetched, and as a result, multi-purpose tenancy is expected to account for a small share of the broader AI RAN market over the near term.
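To make the cost, power, and tenancy argument concrete, here is a minimal simple-payback sketch. Every name and number in it is a hypothetical placeholder chosen for illustration, not operator or vendor data. A feature saves a fraction of some baseline annual spend, may add upfront and running costs (for example, accelerator hardware and its power draw), and under multi-purpose tenancy only part of those new costs is charged to the RAN.

```python
from dataclasses import dataclass


@dataclass
class AiRanCase:
    """Hypothetical single-site inputs; every value is an illustrative placeholder."""
    baseline_annual_cost: float  # yearly spend the feature improves (e.g. energy)
    efficiency_gain: float       # fractional gain, e.g. 0.10-0.30 as quoted in the text
    upfront_cost: float          # incremental capex: hardware, licences, integration
    added_annual_cost: float     # incremental opex, e.g. extra accelerator power
    ran_cost_share: float = 1.0  # share of new costs charged to the RAN:
                                 # 1.0 = single-purpose, <1.0 = shared with AI workloads


def net_annual_benefit(case: AiRanCase) -> float:
    """Yearly savings from the gain minus the RAN's share of new running costs."""
    savings = case.baseline_annual_cost * case.efficiency_gain
    return savings - case.added_annual_cost * case.ran_cost_share


def simple_payback_years(case: AiRanCase) -> float:
    """Years to recover the RAN's share of upfront cost; inf if it never pays back."""
    benefit = net_annual_benefit(case)
    if benefit <= 0:
        return float("inf")
    return case.upfront_cost * case.ran_cost_share / benefit


if __name__ == "__main__":
    # Software feature on existing hardware: small upfront cost, no added power.
    software_only = AiRanCase(100_000, 0.20, 5_000, 0)
    # New accelerated hardware, single-purpose tenancy.
    dedicated = AiRanCase(100_000, 0.20, 60_000, 8_000)
    # Same hardware, with half of its cost carried by non-RAN (AI) workloads.
    shared = AiRanCase(100_000, 0.20, 60_000, 8_000, ran_cost_share=0.5)
    for name, case in [("software-only", software_only),
                       ("dedicated", dedicated),
                       ("shared", shared)]:
        print(f"{name}: payback {simple_payback_years(case):.2f} years")
```

With these placeholder inputs, the software-only feature pays back in 0.25 years, the dedicated accelerated deployment in 5 years, and the same hardware with half its cost carried by AI workloads in 1.875 years. The absolute values carry no weight; what the sketch shows is that the same 20% gain looks very different depending on how much new cost and power it brings and how widely that cost is shared.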

In short, improving something already done by 10% to 30% is not overly exciting. However, if AI embedded in radio signal processing can realize more significant gains, or help unlock new revenue opportunities by improving site utilization and giving telcos an opportunity to sell unused RAN capacity, there are reasons to be excited. But since the latter is a lower-likelihood play, the base case expectation is that AI RAN will produce tangible value-add and that the excitement level will be moderate or, as the Swedes would say, lagom.