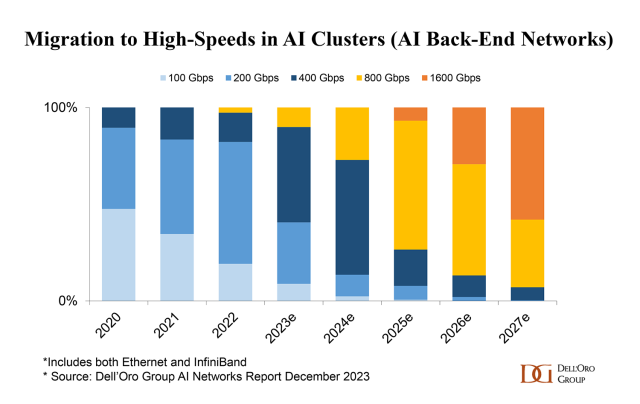

With a wave of announcements coming out of GTC 2025, countless articles and blogs have already covered the biggest highlights. Rather than simply rehashing the news, I want to take a different approach: analyzing what stood out to me from a networking perspective. As someone who closely tracks the market, I see clearly that AI workloads are driving a major disruption in networking infrastructure. While a number of announcements at GTC 2025 were compute related, NVIDIA made it clear that deploying next-generation GPUs and accelerators would not be possible without major innovations on the networking side.

1) The New Age of AI Reasoning Driving 100X More Compute Than a Year Ago

Jensen highlighted how the new era of AI reasoning is driving the evolution of scaling laws, extending from pre-training to post-training and test-time scaling. This shift demands an enormous increase in compute power to process data efficiently. At GTC 2025, he emphasized that the required compute capacity is now estimated to be 100 times greater than what was anticipated just a year ago.

2) The Network Defines the AI Data Center

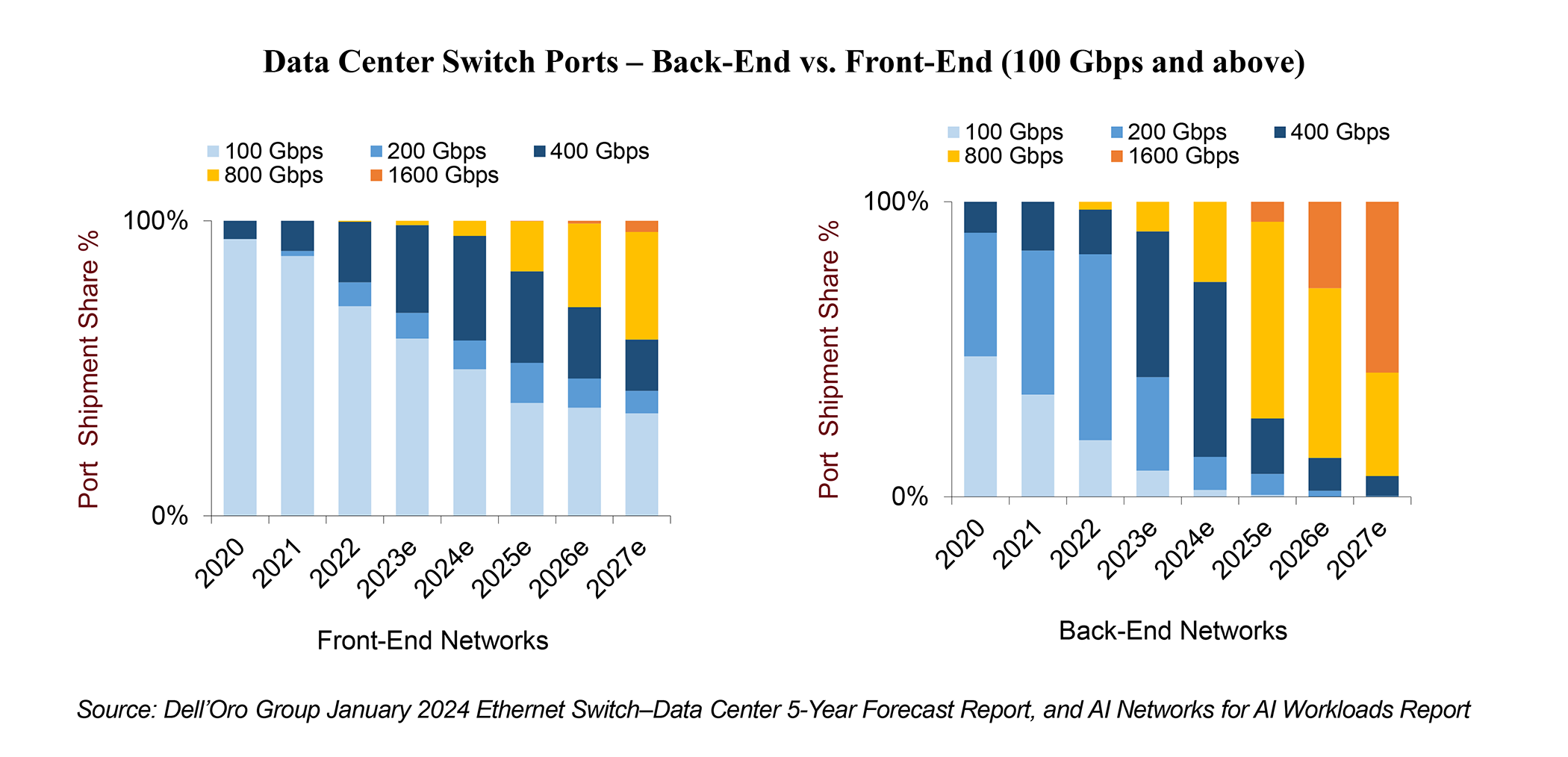

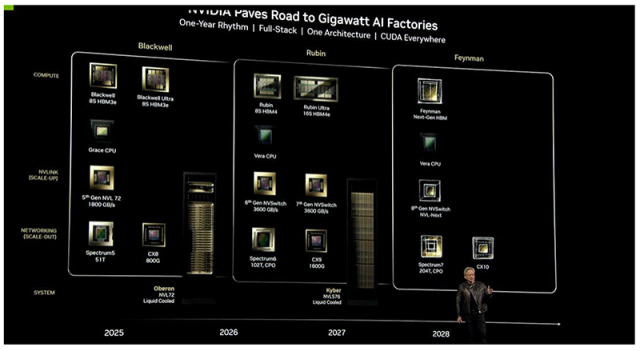

The way AI compute nodes are connected will have profound implications for efficiency, cost, and performance. Scaling up, rather than scaling out, offers the lowest latency, cost, and power consumption when connecting accelerated nodes in the same compute fabric. At GTC 2025, NVIDIA unveiled plans for its upcoming NVLink 6/7 and NVSwitch 6/7, key components of its next-generation Rubin platform, reinforcing the critical role of NVLink switches in its strategy. Additionally, the Spectrum-X switch platform, designed for scaling out, represents another major pillar of NVIDIA’s vision (Chart). NVIDIA is committed to a “one-year rhythm”, with networking keeping pace with GPU requirements. Other key details from NVIDIA’s roadmap announcement also caught our attention, and we are excited to share these with our clients.

3) Power Is the New Currency

The industry is more power-constrained than ever. NVIDIA’s next-generation Rubin Ultra is designed to accommodate 576 dies in a single rack, consuming 600 kW—a significant jump from the current Blackwell rack, which already requires liquid cooling and consumes between 60 kW and 120 kW. Additionally, as we approach 1 million GPUs per cluster, power constraints are forcing these clusters to become highly distributed. This shift is driving an explosion in the number of optical interconnects, both intra- and inter-data center, which will exacerbate the power challenge. NVIDIA is tackling these power challenges on multiple fronts, as explained below.
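As a rough back-of-the-envelope check on the rack figures quoted above, the short sketch below works out how large the jump in rack power is. It uses only the numbers stated in this post (600 kW and 576 dies for Rubin Ultra, 60 kW to 120 kW for Blackwell); the variable and function names are illustrative, and the per-die value is a simple rack-level average that includes everything in the rack, not a chip power specification.

```python
# Figures quoted in the text; everything else is derived arithmetic.
RUBIN_ULTRA_RACK_KW = 600.0
RUBIN_ULTRA_DIES_PER_RACK = 576
BLACKWELL_RACK_KW_RANGE = (60.0, 120.0)  # (low, high)


def rack_power_multiple(new_kw: float, old_kw: float) -> float:
    """Return how many times more power the new rack draws than the old one."""
    return new_kw / old_kw


# Compare against the high and low ends of the Blackwell range.
low_multiple = rack_power_multiple(RUBIN_ULTRA_RACK_KW, BLACKWELL_RACK_KW_RANGE[1])
high_multiple = rack_power_multiple(RUBIN_ULTRA_RACK_KW, BLACKWELL_RACK_KW_RANGE[0])

# Rack-level average only: includes networking, cooling, and other overhead.
kw_per_die = RUBIN_ULTRA_RACK_KW / RUBIN_ULTRA_DIES_PER_RACK

print(f"Rack power multiple vs. Blackwell: {low_multiple:.0f}x to {high_multiple:.0f}x")
print(f"Average rack power per die: {kw_per_die:.2f} kW")
```

Under these figures, a Rubin Ultra rack draws roughly 5 to 10 times the power of a current Blackwell rack, which helps explain why power, rather than floor space, becomes the limiting resource.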

4) Liquid-Cooled Switches Will Become a Necessity, Not a Choice

After liquid-cooled racks and servers, switches are next. NVIDIA’s latest 51.2 T Spectrum-X switches offer both liquid-cooled and air-cooled options. However, all future 102.4 T Spectrum-X switches will be liquid-cooled by default.

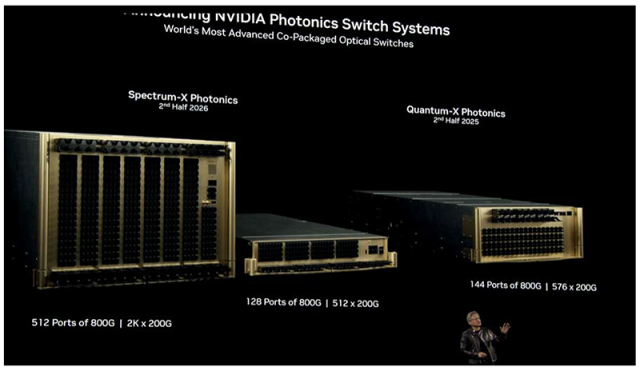

5) Co-packaged Optics (CPO) in Networking Chips Before GPUs

Another key reason for liquid-cooling racks is to maximize the number of GPUs within a single rack while leveraging copper for short-distance connectivity: “Copper when you can, optics when you must.” When optics are necessary, NVIDIA has found a way to save power with Co-Packaged Optics (CPO). NVIDIA plans to make CPO available on its InfiniBand Quantum switches in 2H25 and on its Spectrum-X switches in 2H26. However, NVIDIA will continue to support pluggable optics across different SKUs, reinforcing our view that data centers will adopt a hybrid approach to balance performance, efficiency, and flexibility.

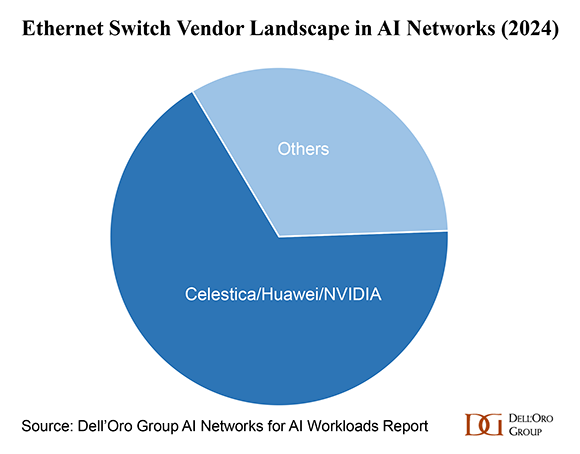

6) Impact on Ethernet Switch Vendor Landscape

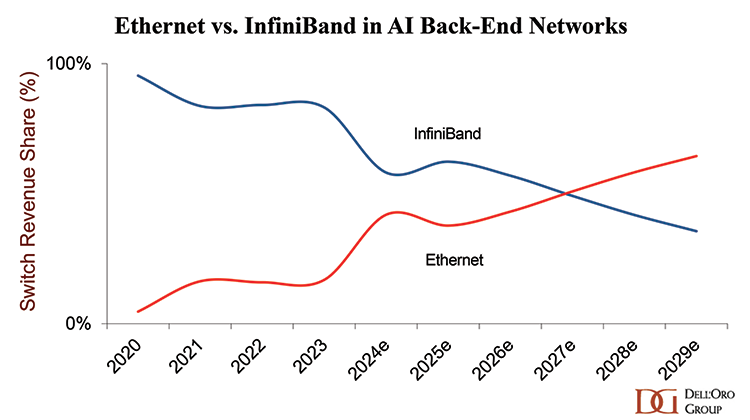

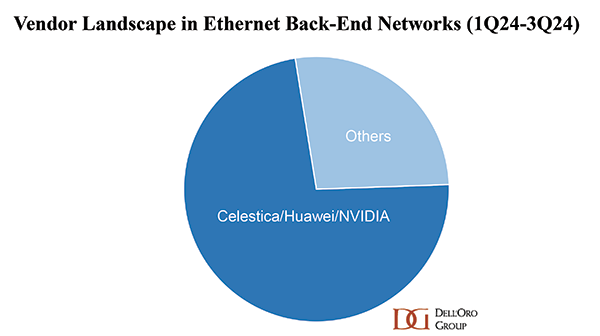

According to our AI Networks for AI Workloads report, three major vendors dominated the Ethernet portion of the AI Network market in 2024.

However, over the next few years, we anticipate greater vendor diversity at both the chip and system levels. Photonic integration in switches is likely to introduce a new dimension, potentially reshaping the dynamics of an already vibrant vendor landscape. We foresee a rapid pace of innovation in the coming years, not just in technology but at the business model level as well.

Networking could be the key factor that shifts the balance of power in the AI race, and customers’ appetite for innovation and cutting-edge technologies is at an unprecedented level. As one hyperscaler put it during a panel at GTC 2025: “AI infrastructure is not for the faint of heart.”

For more detailed views and insights on the AI Networks for AI Workloads report, please contact us at dgsales@delloro.com.