As 2025 comes to a close, we reflect on several remarkable milestones achieved by the data center switching market this year, and what 2026 may have in store for us.

Looking back at 2025, several clear inflection points reshaped the market:

- Ethernet overtakes InfiniBand in AI back-end networking: 2025 marked a decisive turning point for AI back-end networks. Supported by strong tailwinds on both the supply and demand sides, Ethernet surpassed InfiniBand in market adoption. This shift is particularly striking given that just two years ago, InfiniBand accounted for nearly 80% of the data center switch sales in AI back-end networks.

- Overall Ethernet Data Center Switch sales nearly doubled compared with 2022: The rapid adoption of Ethernet in AI back-end deployments propelled total Ethernet data center switch sales to an all-time high in 2025, nearly doubling annual revenues compared with 2022 levels.

- 800 Gbps comfortably surpassed 20 M ports within just three years of first shipments: As a point of reference, 400 Gbps took six to seven years to reach the same milestone.

- The vendor landscape shifted meaningfully toward AI-exposed players: Vendors with greater exposure to AI back-end networking significantly outperformed the broader market in 2025. Companies such as Accton, Celestica and NVIDIA were among the primary beneficiaries of this shift, reflecting how AI-driven demand is reshaping competitive dynamics. Arista maintained the leading position in the Total Ethernet Data Center Switching market.
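
As a rough sanity check on the Ethernet revenue bullet above, the implied compound annual growth rate can be worked out directly. This assumes, for illustration only, that 2025 revenue was exactly double the 2022 level, with $R_{2022}$ and $R_{2025}$ standing for annual revenue in those years. Because sales nearly doubled rather than fully doubled, the actual rate would sit slightly below this figure.

$$
\text{CAGR} = \left(\frac{R_{2025}}{R_{2022}}\right)^{1/3} - 1 = 2^{1/3} - 1 \approx 26\%
$$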

Looking ahead to 2026, questions are emerging around whether the pace of investment can be sustained after such an extraordinary year. While skepticism around AI returns on investment is growing, we believe the industry is still in the early innings of a multi-year AI investment cycle. Based on the latest capital expenditure outlooks from the large hyperscalers (Google, Amazon, Microsoft, Meta, Oracle and others), we expect another strong year of AI-related investment in 2026, which should continue to drive robust spending across the networking portion of the infrastructure stack.

Networking is becoming increasingly critical, as it plays a central role in addressing some of the most challenging scaling bottlenecks in AI deployments—including power availability and compute demand. Below are some of the inflection points expected for 2026:

- Demand remains exceptionally strong in AI back-end networking. We continue to expect strong double-digit growth in AI networking spending, driven by ongoing scale-out of AI clusters. The integration of co-packaged optics could further accelerate market growth, as optics would easily add billions to the market size.

- Supply constraints remain the primary risk to our forecast. We expect demand to continue to outpace supply, with shortages in chips, memory, and other critical components representing the main caveats to our outlook. As a result, the market remains supply-constrained rather than demand-constrained—a challenging dynamic, but ultimately a more favorable one than the reverse.

- Scale-up emerges as a new battlefield for Ethernet. After securing a leading position in the scale-out segment of AI back-end networks, Ethernet is now expanding into scale-up, where NVLink has historically dominated. In this space, Ethernet will compete not only with NVLink but also with UALink, another alternative to NVLink. We anticipate 2026 will be a year full of vendor announcements targeting both Ethernet and UALink opportunities in scale-up. Scale-up represents what could be the largest total addressable market expansion the industry has ever seen.

- 1.6 Tbps switches expected to ship in volume in 2026. This will be the first year of volume deployments of 1.6 Tbps switches, driven by the insatiable demand for high bandwidth in AI clusters. The 1.6 Tbps ramp is expected to be even faster than that of 800 Gbps, surpassing 5 M ports within one to two years of shipments.

- Co-packaged optics (CPO) expected to ramp on both InfiniBand and Ethernet switches. After many years of development and debate, 2026 is expected to see the initial volume ramp of CPO on both InfiniBand and Ethernet switches. On the demand side, major hyperscalers are actively trialing the technology. On the supply side, while NVIDIA is leading the way, we expect other vendors to follow shortly.

- Vendor diversity set to increase in 2026. As AI clusters continue to scale, vendor diversity, spanning both incumbent vendors and new entrants, will become increasingly important to ensure risk mitigation and supply availability. We believe that no single vendor can meet the full demand for AI infrastructure. As a result, we expect SONiC adoption to accelerate in both scale-up and scale-out deployments, as it will be critical in enabling this broader vendor ecosystem.

In summary, as we look ahead to 2026, the AI-driven data center landscape is set to continue its rapid evolution. From Ethernet’s rise in AI back-end networks and the emergence of scale-up as a new battlefield, to the adoption of 1.6 Tbps switches, co-packaged optics, and a more diverse vendor ecosystem, the infrastructure supporting AI is expanding in both scale and complexity. While supply constraints and ROI questions remain challenges, the industry is clearly in the early innings of a multi-year AI journey. Networking, in particular, will play a pivotal role in enabling the next phase of AI growth, making 2026 an exciting year for both innovation and investment.

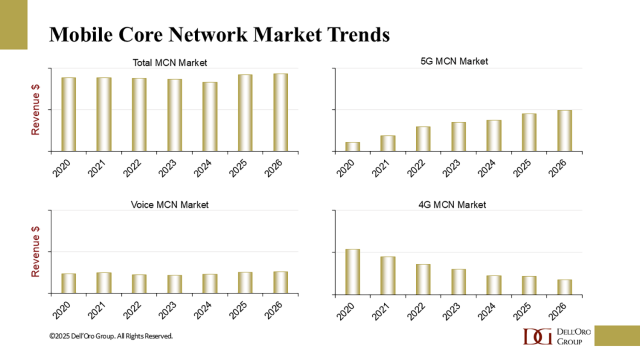

5G Standalone (5G SA) network rollouts have reached critical mass in market maturity, with high population coverage in 40 countries. This seeds the market for more subscribers to move onto 5G SA networks, where they experience higher downlink and uplink speeds and inherently lower latency, which in turn fuels the 5G Mobile Core Network (MCN) market.

Total MCN market revenue hit its low point in 2024, and in 2026 it is projected to reach its highest level since the 5G era began in 2020. Besides more subscribers migrating to 5G SA networks, new applications are becoming more scalable with the advent of Common Network Programmable APIs. Enterprise applications are expected to rise, fueling demand for more MCN capacity, especially at the edge on enterprise premises.

Another factor that will boost growth is the growing use of agentic AI. As agents work in the background on behalf of subscribers 24/7, the demand for more MCN capacity will increase.

In addition, the Voice MCN market will reach new revenue highs in 2026. The low point for the Voice MCN market was 2023. Driving the trend toward higher revenues are more networks migrating from Circuit Switched Core to IMS Core. These are primarily located in countries where MNOs are modernizing their Voice Core in their 4G LTE networks. Recently, we have seen upgrades to 5G VoNR (Voice over New Radio), which utilize the IMS Core and a new generation of Cloud-Native IMS Cores, providing a more scalable and flexible network to support new services. Enhanced immersive and interactive voice calls are enabled in 5G VoNR networks, and the introduction of the IMS Data Channel will provide features competitive with over-the-top applications, utilizing the smartphone’s native dialer.

Even though the 4G MCN market will decline as more subscribers migrate to 5G SA, the rate of decline is decreasing, driven by subscriber growth in countries that do not yet have 5G SA. Some MNOs are sunsetting their 3G MCNs, which increases demand for 4G MCN capacity.

In summary, we expect continued revenue growth in MCNs in 2026. More 5G SA networks coming online, more subscribers joining existing 5G SA networks, the rise of enterprise applications utilizing Common Network APIs, the growing use of agentic AI, enhanced immersive and interactive calling on Voice networks, and a slowing rate of decline in 4G networks are adding up to an all-time high in MCN revenues in 2026.

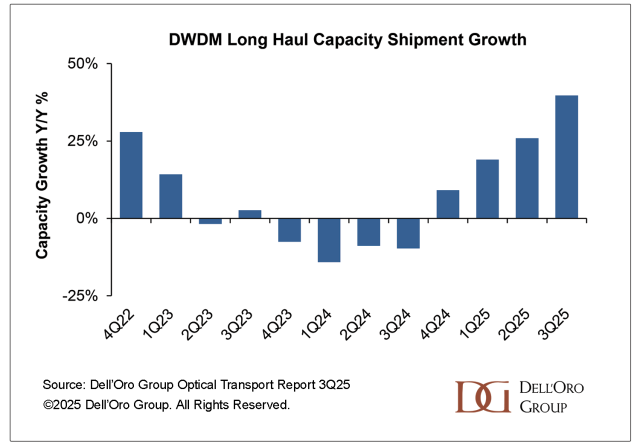

In the most recent 3Q25 market study, the Optical Transport market posted a 15% year-over-year (Y/Y) gain, moving us to raise our full-year outlook for 2025 and 2026.

It was more than just the market’s growth rate that led us to raise our forecast; it was the bandwidth. What I mean is that network capacity demand or bandwidth was back on the rise after two years of stalling. Here is a chart on DWDM Long Haul capacity shipment growth on a Y/Y basis for the past 3 years. As shown, new installations on backbone networks grew at a rate below the historical average of 25% to 30% for 9 quarters. This changed in the middle of 2025, and growth rates are now back above 25%.
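
For readers who want to reproduce this kind of Y/Y comparison on their own data, here is a minimal Python sketch. The function names and the shipment figures are hypothetical and chosen purely for illustration; they are not taken from the market study. It compares each quarter with the same quarter one year earlier and counts how many quarters fall below a chosen threshold, such as the 25% lower bound of the historical average mentioned above.

```python
from typing import List, Optional


def yoy_growth(quarterly: List[float]) -> List[Optional[float]]:
    """Return Y/Y growth for each quarter versus the same quarter a year earlier.

    The first four entries have no year-ago comparison, so they are None.
    """
    growth: List[Optional[float]] = []
    for i, value in enumerate(quarterly):
        if i < 4:
            growth.append(None)
        else:
            growth.append(value / quarterly[i - 4] - 1.0)
    return growth


def quarters_below(growth: List[Optional[float]], threshold: float) -> int:
    """Count quarters whose defined Y/Y growth rate is under the threshold."""
    return sum(1 for g in growth if g is not None and g < threshold)


# Hypothetical capacity shipments in arbitrary units (NOT data from the study).
shipments = [100.0, 104.0, 108.0, 112.0, 115.0, 120.0, 126.0, 140.0]
rates = yoy_growth(shipments)

for quarter, rate in enumerate(rates, start=1):
    label = "n/a" if rate is None else f"{rate:.1%}"
    print(f"Q{quarter}: {label}")

print("Quarters below 25%:", quarters_below(rates, 0.25))
```

With real quarterly capacity data in place of the placeholder list, the same two functions show how long growth stayed under the historical range and when it recovered.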

Data center interconnect (DCI) accounted for most of the bandwidth growth over the past year, driven by large deployments from cloud providers. This trend is expected to continue through 2026 and remain a key market driver. However, it will now expand beyond traditional DCI. The new outlook suggests that the largest cloud providers are nearing a performance ceiling in some geographies due to power grid limitations. The good news is that a solution exists: scaling across multiple data centers to create a larger virtual AI factory.

Hence, we believe that, beginning in 2026, cloud providers will expand their AI data centers across multiple buildings about 100 km apart so that they can tap into different electricity grids to run their power-hungry GPU compute clusters. Connecting these sites will require 800 ZR+ optics and optical line systems (OLSs).

The optical equipment of choice for building new DCI networks, including for scale-across, will likely remain Disaggregated WDM, which accounted for nearly 40% of total Optical Transport market revenue during the first nine months of 2025 (the other 60% of revenue was mainly from large integrated systems). Also, as many of you know, the idea of disaggregating the WDM network originated with cloud providers.

For those unfamiliar with what we call Disaggregated WDM, here is a description: Disaggregated WDM is a product and architecture that promotes the independence of the main elements in a WDM network—transponders and optical line systems. As transponder technology continuously improved and reduced in size, the natural progression was to sell these subsystems as optical pluggable modules for use in WDM systems, routers, and switches. Additional factors that characterize Disaggregated WDM include open interfaces to eliminate vendor lock-in and small form-factor chassis to better align with a pay-as-you-grow model. We track the Disaggregated WDM market in the following major categories:

- Transponder Units: Compact form factor that mainly houses the embedded or pluggable WDM transponders and is used in long-haul and metro deployments.

- Optical Line Systems: Small chassis that mainly houses the amplifier (EDFA and/or Raman), optical add/drop multiplexer (OADM), and mux/demux.

- IPoDWDM ZR/ZR+: In an IPoDWDM architecture, the pluggable WDM transceiver is placed in a router or Ethernet switch rather than a Transponder Unit. We account for the ZR/ZR+ optical plug portion in Disaggregated WDM.

Alongside DCI, we expect the positive trend among communication service providers (CSPs) to continue into 2026. In the third quarter of 2025, non-DCI revenue for DWDM Long Haul rose 14% Y/Y, indicating that demand for network backbone capacity goes beyond just cloud providers and AI expansions. We believe this non-DCI growth is particularly significant because it suggests that CSPs’ inventory correction is complete and their network bandwidth is starting to grow again. This likely means that CSPs will purchase even more optical transport equipment in 2026.

We have an optimistic outlook for 2026 and believe that the Optical Transport market will build on the positive momentum in 2025. We are eagerly looking forward to witnessing this continued growth and development unfold in the coming months and years.

The hyperscale AI infrastructure buildout is entering a more mature phase. After several years of rapid regional expansion driven by resilience, redundancy, and data sovereignty, hyperscalers are now focused on scaling AI compute and supporting infrastructure efficiently. As we move into 2026, the cycle is increasingly defined by capex discipline and execution risk, even as absolute investment levels remain historically high.

Accelerated Servers Remain the Core Spending Driver

Spending on high-end accelerated servers rose sharply in 2025 and continues to anchor AI infrastructure investment heading into 2026. These platforms pull through demand for GPUs and custom accelerators, HBM, high-capacity SSDs, and high-speed NICs and networks used in large AI clusters. While frontier model training remains important, a growing share of deployments is now driven by inference workloads, as hyperscalers scale AI services to millions of users globally.

This shift meaningfully expands infrastructure requirements, as inference workloads require higher availability, geographic distribution, and tighter latency guarantees than centralized training clusters.

GPUs Continue to Dominate Component Revenue

High-end GPUs will remain the largest contributor to component market revenue growth in 2026, even as hyperscalers deploy more custom accelerators to optimize cost, power efficiency, and workload-specific performance at scale. NVIDIA is expected to begin shipping the Vera Rubin platform in 2H26, which increases system complexity through higher compute and networking density and optional Rubin CPX inference GPU configurations, materially boosting component attach rates.

AMD is positioning to gain share with its MI400 rack-scale platform, supported by recently announced wins at OpenAI and Oracle. Despite growing competition, GPUs continue to command outsized revenue due to higher ASPs and broader ecosystem support.

Near-Edge Infrastructure Becomes Critical for Inference

As AI inference demand accelerates, hyperscalers will need to increase investment in near-edge data centers to meet latency, reliability, and regulatory requirements. These facilities—located closer to population centers than centralized hyperscale regions—are essential for real-time, user-facing AI services such as copilots, search, recommendation engines, and enterprise applications.

Near-edge deployments typically favor smaller but highly dense accelerated clusters, with strong requirements for high-speed networking, local storage, and redundancy. While these sites do not approach the power scale of centralized AI campuses, their sheer number and geographic dispersion represent a meaningful incremental capex requirement heading into 2026. In contrast, far-edge deployments remain more use-case dependent and are unlikely to see material growth until ecosystems and application demand further mature.

Networking and CPUs Transition Unevenly

The x86 CPU and NIC markets tied to general-purpose servers are expected to decelerate in 2026 following short-term inventory digestion. In contrast, demand for high-speed networking remains tightly linked to accelerated compute growth. Even as inference workloads outpace training, inference accelerators continue to rely on scale-out fabrics to support utilization, redundancy, and ultra-low latency.

Supply Chains Tighten as Component Costs Rise

AI infrastructure supply chains are becoming increasingly constrained heading into 2026. Memory vendors are prioritizing production of higher-margin HBM, limiting capacity for conventional DRAM and NAND used in AI servers. As a result, memory and storage prices are rising sharply, increasing system-level costs for accelerated platforms.

Beyond memory, longer lead times for advanced substrates, optics, and high-speed networking components are adding further volatility to the supply chain. In parallel, tariff uncertainty and evolving trade policy introduce additional supply-chain risk, potentially elevating component pricing over the medium term.

Capex Remains Elevated, but ROI Scrutiny Intensifies

The US hyperscale cloud service providers continue to raise capex guidance, reinforcing the continuity of the multi-year AI investment cycle into 2026. Accelerated computing, greenfield data center builds, near-edge expansion, and competitive pressures remain strong tailwinds. Changes in depreciation treatment provide levers to optimize cash flow and support near-term investment levels.

However, infrastructure investment has outpaced revenue growth, increasing scrutiny around capex intensity, depreciation, and long-term returns. While cash flow timing can be managed, underlying ROI depends on successful AI monetization, increasing the risk of margin pressure if revenue growth lags infrastructure deployment.